As more and more data is collected from nearly everything a user does (transaction activities, social interactions, information searches, and so on), enterprises have been actively looking for ways to turn this vast amount of raw data into useful information.

BI process flow

It includes the following stages of processing:

- ETL: Extract, transform, and load operational data (from inside the enterprise or from external sources) into a data warehouse (typically organized in a Star/Snowflake schema with Fact and Dimension tables).

- Data exploration: Gain insight into the data using simple visualization tools (e.g. histograms, summary statistics) or sophisticated OLAP tools (slice, dice, rollup, drilldown)

- Report generation: Produce executive reports

- Data mining: Extract patterns from the underlying data to form models (e.g. Bayesian networks, linear regression, neural networks, decision trees, support vector machines, nearest neighbors, association rules, principal component analysis)

- Feedback: The model will be used to assist business decision making (predicting the future)

The gap in processing BIG data

Many data mining and machine learning algorithms are available in both commercial packages (e.g. SAS, SPSS) and open source libraries (e.g. Weka, R). Nevertheless, most of these ML algorithm implementations assume that all the data fits in memory and are not designed to process big data (e.g. terabyte-scale data volumes).

On the other hand, massively parallel processing platforms such as Hadoop Map/Reduce have, over the last few years, proven themselves in processing terabyte or even petabyte ranges of data. Although many sequential algorithms, including a large portion of machine learning algorithms, can be restructured to run in Map/Reduce, there is no corresponding implementation of these ML algorithms readily available in massively parallel form.
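To make the idea of restructuring a sequential computation for Map/Reduce concrete, here is a minimal single-process sketch that computes a mean and variance from per-partition summaries. It only imitates the map and reduce steps with plain Python functions; it is not Hadoop code, and the function names (`map_partition`, `combine`, `mean_and_variance`) are made up for illustration.

```python
from functools import reduce


def map_partition(values):
    """Map step: summarize one partition as (count, sum, sum of squares)."""
    return (len(values), sum(values), sum(v * v for v in values))


def combine(a, b):
    """Reduce step: merge two partial summaries element-wise."""
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def mean_and_variance(partitions):
    """Combine all partition summaries, then derive the final statistics."""
    n, total, total_sq = reduce(combine, map(map_partition, partitions))
    mean = total / n
    variance = total_sq / n - mean * mean  # population variance
    return mean, variance


# Each inner list stands in for a data block held by a different worker.
partitions = [[1.0, 2.0], [3.0, 4.0], [5.0]]
mean, variance = mean_and_variance(partitions)
```

The point of the sketch is that no step needs the whole data set in one place: each partition is reduced to a small summary, and only the summaries are merged.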

Approach 1: Apache Mahout

One approach is to "re-implement" the ML algorithms in Map/Reduce, and this is the path of the Apache Mahout project. Mahout seems to have implemented an impressive list of algorithms, although I haven't used them in my projects yet.

Approach 2: Ensemble of parallel independent learners

This is an alternative path that doesn't require re-implementation of existing algorithms. It works in the following way.

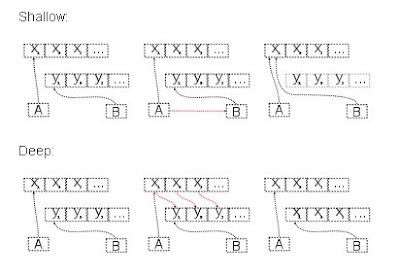

- Draw samples from the big data into many sample data sets, each of which can fit into the memory of a single, individual learner.

- Assign each sample data set to an individual learner, which uses existing algorithms to learn a model. After learning, each individual learner keeps its own learned model.

- When a decision / prediction request is received, each individual learner comes up with its own prediction, and the results are then combined in some way (e.g. for a classification task, the learners vote for the predicted class and the majority wins; for regression, the average of the estimated values is used as the predicted output value).
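The three steps above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the learner is a tiny nearest-centroid classifier standing in for whatever existing in-memory algorithm you would actually use, the learners run sequentially rather than in parallel, and all function names and the synthetic data are hypothetical.

```python
import random
from collections import Counter


def draw_samples(data, n_learners, sample_size, seed=0):
    """Step 1: draw one simple random sample per learner."""
    rng = random.Random(seed)
    return [rng.sample(data, sample_size) for _ in range(n_learners)]


def train_nearest_centroid(sample):
    """Step 2: stand-in learner. sample is a list of (features, label)."""
    sums, counts = {}, Counter()
    for features, label in sample:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, x in enumerate(features):
            acc[i] += x
        counts[label] += 1
    centroids = {
        label: [v / counts[label] for v in acc] for label, acc in sums.items()
    }

    def predict(features):
        # Return the label whose centroid is closest (squared distance).
        return min(
            centroids,
            key=lambda lab: sum(
                (a - b) ** 2 for a, b in zip(features, centroids[lab])
            ),
        )

    return predict


def majority_vote(models, features):
    """Step 3 (classification): every model votes, the majority wins."""
    votes = Counter(model(features) for model in models)
    return votes.most_common(1)[0][0]


def average_estimate(estimates):
    """Step 3 (regression): average the individual estimates."""
    return sum(estimates) / len(estimates)


# Synthetic, well-separated two-class data, used only for illustration.
rng = random.Random(42)
data = [([rng.gauss(0, 1), rng.gauss(0, 1)], "a") for _ in range(500)]
data += [([rng.gauss(10, 1), rng.gauss(10, 1)], "b") for _ in range(500)]

samples = draw_samples(data, n_learners=5, sample_size=50)
models = [train_nearest_centroid(s) for s in samples]
prediction = majority_vote(models, [9.5, 10.2])
```

In a real deployment each call to the training function would run on a separate machine against its own sample, and only the small learned models would need to be kept around for prediction.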

I also found that this approach can smoothly fade out outdated models. As users' behavior may change over time, so does the validity of a learned model. With this ensemble approach, I can have multiple learners each learn their model periodically. Every time a prediction is needed, I pick the latest k models and combine the final prediction based on a time-decayed weighted voting model. Outdated models automatically slide out of the k-size window.
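Here is a rough sketch of what such a time-decayed vote over the latest k models could look like. The exponential half-life weighting is my assumption for illustration (any decreasing function of model age would fit the description), and `time_decayed_vote`, `half_life`, and the example labels are hypothetical names.

```python
from collections import defaultdict


def time_decayed_vote(models, features, now, k=3, half_life=7.0):
    """Weighted vote over the k most recently trained models.

    models: list of (trained_at, predict_fn) pairs.
    Assumption: a model's weight halves every `half_life` time units.
    """
    # Keep only the k newest models; older ones fall out of the window.
    latest = sorted(models, key=lambda m: m[0], reverse=True)[:k]
    scores = defaultdict(float)
    for trained_at, predict in latest:
        age = now - trained_at
        weight = 0.5 ** (age / half_life)
        scores[predict(features)] += weight
    return max(scores, key=scores.get)


# Four models trained a week apart; the two oldest disagree with the newest.
models = [
    (0.0, lambda x: "churn"),
    (7.0, lambda x: "churn"),
    (14.0, lambda x: "churn"),
    (21.0, lambda x: "stay"),
]
decision = time_decayed_vote(models, features=None, now=21.0, k=3)
```

With these numbers the newest model carries weight 1.0 while the two older models inside the window carry 0.5 and 0.25, so the most recent opinion wins even though it is outnumbered. The model trained at time 0 is outside the window and does not vote at all.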

One gotcha of the sampling approach is the handling of rare events (since you may lose those rare events in sampling). In this case, stratified sampling (instead of simple random sampling) should be used.
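A minimal sketch of stratified sampling, assuming each record can be mapped to a stratum (for example its class label) by a `key` function. The `min_per_stratum` floor is an addition of mine to guarantee that rare strata are never sampled down to nothing, and the "fraud" / "normal" records are invented purely for the example.

```python
import random
from collections import defaultdict


def stratified_sample(records, key, fraction, min_per_stratum=1, seed=0):
    """Sample `fraction` of each stratum, keeping at least a few rare items."""
    rng = random.Random(seed)
    strata = defaultdict(list)
    for record in records:
        strata[key(record)].append(record)

    sample = []
    for members in strata.values():
        n = max(min_per_stratum, round(len(members) * fraction))
        n = min(n, len(members))  # never ask for more than the stratum holds
        sample.extend(rng.sample(members, n))
    return sample


# 990 common events and 10 rare ones (illustrative data only).
records = [("normal", i) for i in range(990)] + [("fraud", i) for i in range(10)]
sample = stratified_sample(
    records, key=lambda r: r[0], fraction=0.1, min_per_stratum=5
)
rare_kept = sum(1 for label, _ in sample if label == "fraud")
```

A simple random sample of the same size could easily contain zero or one of the rare records, whereas here every sample data set is guaranteed to contain some of them. Note that over-representing a rare stratum changes the class proportions the learner sees, which may need to be accounted for when interpreting its predictions.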